AI Against Humanity

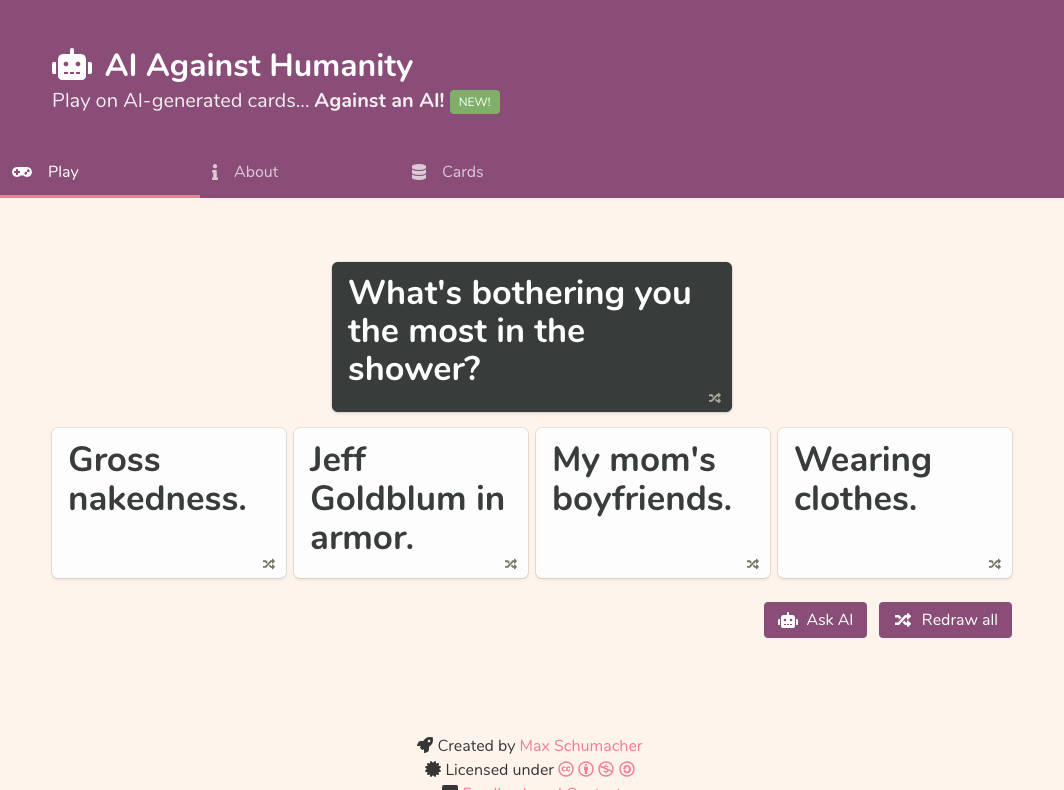

Do you like Cards Against Humanity or messed-up humor? Try out my browser game AI Against Humanity. All the cards you see were generated by a neural network. You’re also competing against another neural network! But beware: It contains some extremely strong and offensive language 😇

In this blog post, I want to detail the process of how a little experiment with a language model turned into a complete browser game including an AI opponent. I hope it can serve as a case study of how to quickly build and ship ML / web ideas.

TL;DR

- The cards were generated by a GPT-2 language model fine-tuned on Cards Against Humanity text

- The frontend to the game is built with jQuery and TensorFlow.js

- There isn’t really any backend. Everything is statically generated and then served from AWS S3

- I use a simple AWS Lambda function to keep track of user interactions

- The AI opponent uses PCA-reduced BERT embeddings and a small neural network to make decisions

- It was trained on aggregated interaction data of users playing the game in the early phase

The Idea

Five years ago, I wrote a blog post about letting language models write new Bible texts. The basic idea was to have a neural network read a text (e.g. from the Bible) and then make it generate new text based on that. Since then, a lot has happened in the world of language models. The two major game-changers are:

- Better model architectures for reading and writing language. Five years ago, we used RNNs. Now we use Transformers.

- Pre-training on a large corpus. Similar to computer vision, we can get vast advantages by first pre-training the model on a larger dataset and then fine-tuning it on whatever data / task we need.

Many people have written articles explaining the two, and I will not repeat here what others can do better than me. To understand the Transformer architecture, check out Jay Alammar’s The Illustrated Transformer. To learn more about the pre-trained model we use here, check out the official announcement for GPT-2 by OpenAI. GPT-2 is the current state of the art in terms of language generation.

When OpenAI released their model, I felt compelled to repeat my Bible experiments from back then. Can we create even better Bible quotes? What about other kinds of text? I fine-tuned the pre-trained model on the King James Bible, all of Trump’s tweets and some Wikipedia articles. The results were really impressive! See the appendix for examples.

This is when I saw a post on Reddit about creating Cards Against Humanity cards using a Char-LSTM. Having the maturity and sense of humor of a 14-year-old, and thus being a natural fan of CAH, I loved the idea!

If you haven’t heard of Cards Against Humanity (CAH), it’s a popular card game “for horrible people”. There are black question cards and white answer cards. The goal is to find hilarious / messed-up combos and then vote on them to decide whose is the funniest. It contains a lot of really graphic and messed-up ideas and may not be for everyone, but I absolutely love it!

So, apologizing to u/ablacklama for taking his idea, I fine-tuned a GPT-2 model on CAH cards and checked out the results. They were hilarious! Here are some early examples:

“Farting Your Way To The Center Of The Earth.”

“Sexy grandmothers swinging by.”

“Leaning against an oak tree and taking live horses for granted.”

“A very sexy mummy.”

I was hooked. From there on, the roadmap became clear: Instead of simply letting people read these new cards in a list and forget about them the next minute, why not let them interact with them? I could build a web app where people can play a basic version of the game, all on fake cards. Then, I could collect which card combos users prefer and train a neural network on this to understand common humor. Finally, I could use this trained model to power an AI opponent!

So I went to work.

Creating the cards

Downloading and fine-tuning GPT-2 is extremely easy, fast and free thanks to OpenAI and Google’s Colab.

If you want to try it out yourself, simply start a new notebook on Colab, install the awesome gpt-2-simple package and get going. It even comes with a great example notebook.

I applied this to a dataset of Cards Against Humanity questions and answers. You can obtain all their cards as JSON from here. I quickly noticed that you need to separate the questions from the answers and fine-tune two separate models: creating good question cards is much harder than creating random funny answers.
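If you want to reproduce the split, here is a minimal Python sketch. It assumes the JSON has a `blackCards` list of objects with a `text` field and a `whiteCards` list of plain strings, which is how some community CAH dumps are laid out; check the file you actually download. The output file names are hypothetical. Each card is wrapped in `<|startoftext|>` / `<|endoftext|>` markers, a convention often used with gpt-2-simple so that generated samples can later be cut at card boundaries via the `prefix` and `truncate` arguments of `gpt2.generate`.

```python
import json
from pathlib import Path

START, END = "<|startoftext|>", "<|endoftext|>"


def split_cards(cah: dict) -> tuple[list[str], list[str]]:
    """Return (questions, answers) as stripped strings.

    Assumes black cards are dicts with a "text" field and white cards are
    plain strings; adjust this for the JSON dump you actually use.
    """
    questions = [card["text"].strip() for card in cah.get("blackCards", [])]
    answers = [card.strip() for card in cah.get("whiteCards", [])]
    return questions, answers


def to_training_text(cards: list[str]) -> str:
    # One card per line, wrapped in start/end markers; empty cards are skipped
    return "\n".join(f"{START}{card}{END}" for card in cards if card) + "\n"


def write_training_files(json_path: str, out_dir: str = ".") -> None:
    cah = json.loads(Path(json_path).read_text(encoding="utf-8"))
    questions, answers = split_cards(cah)
    out = Path(out_dir)
    # Two files, because questions and answers get two separately fine-tuned models
    (out / "questions.txt").write_text(to_training_text(questions), encoding="utf-8")
    (out / "answers.txt").write_text(to_training_text(answers), encoding="utf-8")
```

Each of the two files would then go into its own fine-tuning run.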

Creating the frontend prototype

The goal from the beginning was to run the whole game inside a static website and let JS do all the heavy lifting. For the prototype, I went with good old jQuery, though if the project keeps growing I will consider switching to Vue at some point. For the CSS framework, I tried Bulma, which worked out pretty nicely.

Basically, I keep the pre-generated cards along with a unique ID in text files. The frontend then loads and shuffles these and shows questions and answers to the user. No biggie so far, right?
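The post doesn’t spell out the file format, so here is a hedged sketch of one way to do it, assuming one card per line stored as `id<TAB>text`. The ID scheme (first 8 hex characters of the card’s SHA-1) is a hypothetical choice that keeps IDs stable when the files are regenerated, and the shuffle uses Python’s built-in Fisher-Yates shuffle. The real frontend does this in jQuery, so treat the Python purely as an illustration of the logic.

```python
import hashlib
import random


def card_id(text: str) -> str:
    # Hypothetical scheme: first 8 hex chars of the SHA-1 of the card text
    return hashlib.sha1(text.encode("utf-8")).hexdigest()[:8]


def dump_cards(cards: list[str]) -> str:
    """Serialize cards as 'id<TAB>text' lines (assumes no newlines in card text)."""
    return "".join(f"{card_id(card)}\t{card}\n" for card in cards)


def load_cards(data: str) -> dict[str, str]:
    """Parse 'id<TAB>text' lines back into {id: text}."""
    cards = {}
    for line in data.splitlines():
        if line.strip():
            cid, text = line.split("\t", 1)
            cards[cid] = text
    return cards


def shuffled_deck(cards: dict[str, str], rng: random.Random) -> list[str]:
    # random.shuffle performs an in-place Fisher-Yates shuffle of the IDs
    deck = list(cards)
    rng.shuffle(deck)
    return deck
```

With a few thousand cards, 32-bit IDs make collisions unlikely, but a longer prefix costs almost nothing if you want more headroom.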

Deploying the frontend and logging user interactions

I’m a big fan of AWS S3 and Lambda. You can go really far without paying more than a dollar a month, and you don’t have to worry about dev ops at all. That’s why I chose to deploy the frontend and all data assets to S3 using s3_website.

It gets trickier when you want to log user interactions, e.g. which card combos users find funny and which they don’t. At a minimum, I needed an API that logs whatever I send to it and lets me export these logs later. Traditionally, this would require a running server, hosting costs and actually worrying about dev ops. I spent some time looking for a simpler solution and finally arrived at this little hack:

A tiny Lambda function that simply logs everything it receives to stdout. I can then access all the logs in CloudWatch and download them for further processing. This works for the moment and costs me very little. That said, it’s a bit hacky and if you do have a better idea, please don’t hesitate to comment below 🙂.
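A minimal sketch of such a logging function in Python. The post doesn’t say which runtime or trigger the real function uses; this assumes an API Gateway proxy integration, where the POSTed payload arrives as a JSON string in `event["body"]`. Anything a Lambda function prints ends up in its CloudWatch log stream, which is what makes the hack work. The CORS header is there because the game is served from S3 on a different origin.

```python
import json

# The game is served from S3 on another origin, so the browser needs CORS
CORS_HEADERS = {"Access-Control-Allow-Origin": "*"}


def format_log_line(payload) -> str:
    # One compact JSON object per line keeps the exported logs easy to parse
    return json.dumps(payload, separators=(",", ":"), sort_keys=True)


def lambda_handler(event, context):
    """Print the POSTed interaction; Lambda forwards stdout to CloudWatch Logs.

    Assumes an API Gateway proxy integration, where the request payload
    arrives as a JSON string in event["body"].
    """
    try:
        payload = json.loads(event.get("body") or "{}")
    except json.JSONDecodeError:
        return {"statusCode": 400, "headers": CORS_HEADERS, "body": "invalid JSON"}
    print(format_log_line(payload))
    return {"statusCode": 200, "headers": CORS_HEADERS, "body": "ok"}
```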

Training and integrating the AI opponent

After posting this first prototype to r/ml and asking my friends to play with it for a few days, I got a few tens of thousands of interactions to work with. I keep track of several different interactions, but most important is the picking of an answer: Given a question card and four answer candidates, which answer did the user prefer?

Based on these data points, we can aggregate the choices into a mean probability for each question-answer pair. A model can then learn the probability of playing answer A given question Q. All we need to do in the game is take the answer with the highest probability in each case.
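The aggregation step could look like this sketch. The event layout (`question`, `shown`, `picked`) is a hypothetical schema, not the one the game actually logs. Each pair’s value is simply how often the answer was picked divided by how often it was shown next to that question; with four candidates, purely random choices would give about 0.25.

```python
from collections import defaultdict


def pick_rates(events, min_shown=1):
    """Aggregate pick events into mean pick rates per (question, answer) pair.

    Each event is assumed to look like
    {"question": qid, "shown": [aid, ...], "picked": aid}.
    Pairs shown fewer than min_shown times are dropped as too noisy.
    """
    shown = defaultdict(int)
    picked = defaultdict(int)
    for event in events:
        q = event["question"]
        for a in event["shown"]:
            shown[(q, a)] += 1
        picked[(q, event["picked"])] += 1
    return {
        pair: picked[pair] / n
        for pair, n in shown.items()
        if n >= min_shown
    }
```

These rates then serve as soft training targets for the model.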

So far so good, but remember we want everything to work without a server. This model, too, has to work in the browser and be fast enough to provide a smooth experience on any phone or laptop.

Thankfully, we have TensorFlow.js, the awesome library that lets you train and use neural networks directly in client-side JS. If you need a primer on this, check out my blog post from last year where I explain in great detail how to detect where on the screen a user is looking, entirely in the browser (and also backend-less).

Great, so we have the means to import a pre-trained TensorFlow model and run it on the client. But if it’s supposed to run fast on any device, it needs to be tiny! And language and humor are hard problems… So how do we solve it well enough to be funny given the constraints?

You have probably heard of BERT. We can use it to extract powerful sentence embeddings that contain a high-level representation of a given text in only 768 numbers. Wait, 768? Um, that’s still too much for our purposes, I’m afraid…

Here comes the next trick: Let’s use PCA to reduce the dimensionality of the vectors to something easier to wield. I found that just 10 dimensions are enough for a baseline; adding more improves the results only marginally.

So, for each pre-generated card, we extract the BERT representation, then fit PCA on all of them and store the resulting 10 values for each card in another file. Our assumption is that these embedding vectors describe the text and its content well enough that we can learn something from them without having to train another deep network.
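Here is a sketch of the reduction step using plain NumPy (PCA via SVD of the mean-centered data) rather than a library PCA class. It assumes the BERT sentence embeddings have already been computed into an `(n_cards, 768)` array; how they were pooled is not specified in the post. The JSON layout `{card_id: [10 floats]}` and the rounding are hypothetical choices to keep the file small for the browser.

```python
import json

import numpy as np


def fit_pca(x: np.ndarray, k: int = 10):
    """Fit PCA via SVD of the mean-centered data; return (mean, components)."""
    mean = x.mean(axis=0)
    # Rows of vt are the principal directions, sorted by decreasing singular value
    _, _, vt = np.linalg.svd(x - mean, full_matrices=False)
    return mean, vt[:k]


def transform(x: np.ndarray, mean: np.ndarray, components: np.ndarray) -> np.ndarray:
    # Project centered vectors onto the top-k principal directions
    return (x - mean) @ components.T


def export_embeddings(card_ids, embeddings, k=10, decimals=4) -> str:
    """Reduce card embeddings to k dims and return JSON {card_id: [floats]}."""
    mean, components = fit_pca(embeddings, k)
    reduced = np.round(transform(embeddings, mean, components), decimals)
    return json.dumps({cid: row.tolist() for cid, row in zip(card_ids, reduced)})
```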

The actual neural network then takes two of these embedded vectors (one for the question, one for the answer) and outputs a probability value. There’s no magic here: a single hidden layer and some input dropout worked great for me.
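To make the architecture concrete, here is a NumPy sketch of such a network: the question and answer vectors are concatenated, passed through input dropout and one ReLU hidden layer, and a sigmoid outputs the pick probability. The real model was built with TensorFlow so it could run in TensorFlow.js; NumPy is swapped in here only so the example runs without extra dependencies, and the hidden size, dropout rate and learning rate are guesses. Because the targets are mean pick rates rather than hard labels, binary cross-entropy is used with soft targets.

```python
import numpy as np


def bce(y, p, eps=1e-7):
    """Binary cross-entropy that also accepts soft targets y in [0, 1]."""
    return -np.mean(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps))


class PairScorer:
    """One hidden layer: concat(question, answer) -> P(answer picked).

    NumPy stand-in for the small TensorFlow model; sizes are illustrative.
    """

    def __init__(self, dim=10, hidden=32, dropout=0.2, seed=0):
        self.rng = np.random.default_rng(seed)
        self.dropout = dropout
        # He-style initialization for the ReLU layer
        self.w1 = self.rng.normal(0.0, np.sqrt(2.0 / (2 * dim)), (2 * dim, hidden))
        self.b1 = np.zeros(hidden)
        self.w2 = self.rng.normal(0.0, np.sqrt(1.0 / hidden), (hidden, 1))
        self.b2 = np.zeros(1)

    def _forward(self, x):
        h = np.maximum(0.0, x @ self.w1 + self.b1)
        z = np.clip(h @ self.w2 + self.b2, -30, 30)  # avoid overflow in exp
        return h, (1.0 / (1.0 + np.exp(-z))).ravel()

    def predict(self, q, a):
        # Dropout is only applied during training
        return self._forward(np.hstack([q, a]))[1]

    def train_step(self, q, a, y, lr=0.1):
        """One full-batch gradient-descent step; returns the training loss."""
        x = np.hstack([q, a])
        # Inverted input dropout: zero random features, rescale the survivors
        keep = self.rng.random(x.shape) >= self.dropout
        x = x * keep / (1.0 - self.dropout)
        h, p = self._forward(x)
        # For sigmoid + cross-entropy, dLoss/dlogit = (p - y) / batch_size
        dz = ((p - y) / len(y))[:, None]
        dh = (dz @ self.w2.T) * (h > 0)  # computed before w2 is updated
        self.w2 -= lr * (h.T @ dz)
        self.b2 -= lr * dz.sum(axis=0)
        self.w1 -= lr * (x.T @ dh)
        self.b1 -= lr * dh.sum(axis=0)
        return bce(y, p)
```

In practice you would train on the pairs from the aggregated pick rates and then export the weights for the browser.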

Back in the client, we load the model and embedding vectors from S3 and deal four cards to the AI player’s hidden hand. In each turn, we ask the model to predict the probabilities of each answer card given the question card and simply pick the best one. That’s it!
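The in-game decision is then just an argmax over the hand. In this sketch, `score` stands in for whatever wraps the model (looking up both cards’ 10-dimensional vectors and calling the network); that wrapper and the `deal` helper are hypothetical.

```python
from typing import Callable, List, Sequence

HAND_SIZE = 4  # the AI holds four answer cards


def deal(deck: List[str], n: int = HAND_SIZE) -> List[str]:
    """Take the top n cards off an already shuffled deck (mutates the deck)."""
    hand = deck[:n]
    del deck[:n]
    return hand


def ai_pick(question_id: str, hand: Sequence[str],
            score: Callable[[str, str], float]) -> str:
    """Return the answer card with the highest predicted pick probability."""
    return max(hand, key=lambda answer_id: score(question_id, answer_id))
```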

The Result

I’m really impressed with the results myself. The game is fun, the AI player has some pretty sick moves, and the generated cards are often hilarious. I keep getting messages from users saying it had them in stitches. But don’t take my word for it, try it yourself!

Now, did we make an AI understand and create real humor? Probably not. GPT-2 and other language models are famous for their incoherent rambling, like a kid talking in their sleep. This very randomness comes to our aid here, because being random can be very funny. And I selected the funny ones for you to enjoy 👌. Many of the more creative ideas probably come from some deep, dark corner of the web that OpenAI used for pre-training. Remember what Jim Jarmusch said:

“Nothing is original. Steal from anywhere that resonates with inspiration or fuels your imagination. […] If you do this, your work (and theft) will be authentic. Authenticity is invaluable; originality is non-existent. And don’t bother concealing your thievery - celebrate it if you feel like it. In any case, always remember what Jean-Luc Godard said: ‘It’s not where you take things from - it’s where you take them to.’”

Let me know what you think and if you have any other ideas you’d like to see. Also, feel free to share the game with your non-technical friends! 🙂

Ideas for the future

- Multiplayer *

- SFW Version

- Let the model create new cards on-line *

- Multi-gap questions

* These would require a real server running in the back. Scandalous!

Postscript: How to bring Trump back from the dead

First of all, if you had a heart attack reading the heading just now: I use Trump as the example because A) his tweets are often hilarious and B) there is probably no Twitter user more famous and active. The sheer volume of his tweets almost qualifies as Big Data.

A while ago I was reading Dan Simmons’s mind-blowing Hyperion, a complex sci-fi novel from 1989 full of fascinating ideas. One of its many themes is the “revival” of the poet John Keats as an AI built from all his writings. The novel is very nuanced and explores how similar such a reincarnation can really be to the original.

I can’t help but feel that with language models we’ve actually taken a step towards making that possible. If you have the time, check out Appendix B for examples of Trump tweets that GPT-2 can create, even without context!

Now imagine this: instead of just learning what Trump tweets look like in general, the model is also given the whole state of Twitter at the time (say, the last million tweets) as context. It might learn to comment on current events and news, reply to others’ questions, etc.

GPT-2 is still not good enough, but there’s no saying we won’t develop a model at some point that is powerful enough to approach Trump’s persona to a high degree. If so, we could keep Trump alive on Twitter forever, couldn’t we?

Appendix A: Bible Quotes

Fine-tuned on the King James Bible.

Unconditioned:

10:31 And the LORD said unto Moses, This garment shall not be taken away from thee; neither shalt thou make any abode for thee among the children of Israel.

10:32 And the LORD said, Thus will I do unto you, that I will make you clean to do this, if thou wilt not take the garment, and put it on his head.

10:33 And the LORD said, Thou shalt not put it on the other his head: and he shall take it on his head.

See the numbering? That wasn’t working with LSTMs.

7:19 He sat upon a couch with his feet upon the ground, and he lifted up his eyes unto heaven, and looked upon me.

Ohhhkay?

And they had a king in Jerusalem, named David, who was a man of great stature, and full of honour, and had a crown of gold, and had the name of Solomon.

This sounds like an almost perfectly coherent quote, doesn’t it? I checked: it does not appear in the original text. Also, David was Solomon’s father, so the last part doesn’t make sense. Otherwise, impressive!

Conditioned (query in bold):

And the LORD called Donald Trump a prophet, and he is the son of Abraham, and the LORD has raised him up to the throne.

23:28 And they said unto him, Behold, thou hast been an example for all the fear of the LORD.

🤯

And the LORD farted and made a great noise, and the land was shaken.

Bahaha

And the LORD said unto him, my favorite color is purple; it is not the same as blood.

Bold choice.

A man who lies with a man, as one lying with a woman, is both holy and blameless.

Yay, go God!

Appendix B: Trump Tweets

Because why not? Obtained from the Trump Twitter Archive. Preprocessed by replacing URLs and dropping all tweets with fewer than 100 retweets.
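The preprocessing could be sketched like this. It assumes each tweet in the archive export is a dict with `text` and `retweet_count` fields, and that a plain `https?://` regex is good enough to catch the links. The `<URL>` placeholder is a hypothetical choice, since the post doesn’t say what the URLs were replaced with.

```python
import re

URL_RE = re.compile(r"https?://\S+")
URL_TOKEN = "<URL>"  # hypothetical placeholder; the post doesn't name one


def preprocess(tweets, min_retweets=100):
    """Replace URLs and keep only tweets with at least min_retweets retweets.

    Assumes each tweet is a dict with "text" and "retweet_count" keys.
    """
    kept = []
    for tweet in tweets:
        if int(tweet.get("retweet_count", 0)) < min_retweets:
            continue
        kept.append(URL_RE.sub(URL_TOKEN, tweet["text"]).strip())
    return kept
```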

Unconditioned:

I am in no way qualified to be the commander-in-chief of the GREAT UNITED STATES.

Dial back self-awareness by about 90%.

I am a very happy man. I’m a great guy, I’m smart, and I’m a winner!

Is that you, Donald?

Conditioned (query in bold):

I want to sleep with my daughter

Ouch!

I want to have sex with all the girls at the Miss USA pageants.

Yikes, how can the model know him so well??

Finally, I asked Mr. Trump what the true meaning of Covfefe is:

The true meaning of covfefe is

- that it’s a great word!

- that I am not happy!

- “If you can’t stand the truth, who can?”

- that I’m very glad I didn’t lose to Donald Trump in a Miss Universe pageant. I’ve had enough of the phony media!

Appendix C: Wikipedia Articles

As in Andrej Karpathy’s original post, we use a tiny subset of Wikipedia to train. It has quite a bit of XML code in it, but the largest chunk should be pure Wikipedia text.

Unconditioned:

Game of Thrones is in the process of acquiring its first female actor.

British-Icelandic television series will feature Tyrion Lannister as the father who leads the army following the death of his beloved wife Catelyn Stark. The character will play Tyrion’s firstborn son (and heir). And in a surprise twist, the show will also feature Cersei Lannister as Cersei’s rival husband. HBO has hired former director of photography Jon Ronson to direct the series according to Variety.

Bahaha. This doesn’t seem very Wikipedia-ish, maybe some artifacts from the untuned GPT-2?

The term “physicist” was originally a way to describe individuals whose job was investigating the behavior of subatomic bodies. But in the context of physics (and especially physics-based medicine) one is usually better described as someone whose job was to create a world of physics.

🤔

A radical criminological approach to violent crime has been championed by the criminologist Donald A. Hackett, former Director of the National Institute of Justice. Products of his study include the phrase “The criminological approach”: the study of crime as a system study from der tragär (state-inside affair) to serial attack.

At just 2.2 years old, the study of crime came under the critical microscope by the French Deputy Prime Minister, Christophe January, who launched his institutional “suicide police” program; and in 1977 by President Ronald Reagan. The Gun Control Act of 1968 further legitimised the concept. Discrimination against the civilian population would eventually eventually lead to the justified fears of FBI agents, who no doubt regarded Carmans as dropped devourers of maternally deviant students into and beyond the university.

You can see the samples are not terribly coherent over longer paragraphs, but they are already much better than Karpathy’s original samples.

Conditioned (query in bold):

Machine Learning is one of the oldest and most advanced methods for training any machine, and one of the fastest methods of learning itself (http://www.informatik.net/Articles/machine_learning/). The main goals of machine learning are: 1. to predict the future by using knowledge obtained from the past; 2. to do automatic tasks to determine what things and systems are appropriate; 3. to create new things or new systems.

Note the fake URL.

Breathing is still the leading cause of morbidity and mortality in Australia, but for the first six months (2004/05 to 2005/06) it has fallen from the top of the list in all countries surveyed.

Better stop now!

Nickelback are often viewed as a cult classic, perhaps most notably in the form of the rock’n’roll soundtrack to the film “Candy Crush Saga.”

I had no idea!

When the US Navy attacked South-West-Portugal in 2005, the US government was very concerned that the Portuguese would respond in kind. Thereafter, the US sent a group of warships to the area to act as “battlers” against the Portuguese. The first group of ships headed to North-West-Portugal. On July 4, 2005, the US Navy attacked South-West-Portugal in a military exercise. By July 15, the US-backed naval blockade had been lifted. This had not been an easy decision for the US.

Fake news, I guess :)

Scientifically, brain farts are thought to be about 2-6% of all human excretions.

I knew it!

Christiana Dakoto McCarthy II was born in St Louis, Missouri on March 15, 1847. Her father, a tailor, and her mother, a professional nurse, had been separated at the age of 15, when her father, a prominent merchant, married a rich seamstress and raised her two sisters. Her mother and her sisters were all married to white businessmen. She worked as a maid, and became a skilled carpenter and handyman. In 1902 she married her first husband, a farmer, and had many children.

Wow! Fake biography of a fake person. The only weirdness is the year of her first marriage: In 1902, she should have been 55.

Drklav, pronounced ˀšɾlav, is the name of the last child (in the original Greek script) of Zeus. Zeus is the god of agriculture, and as the youngest father of the gods he is also the father of the race that produces the best grain.

Alexander the Great’s darkest secret was his obsession with immortality. In the fourth century B.C.E., in a time when he was on the verge of losing his head [or at the very least] his life, he built his kingdom at the base of Mount Olympus and raised the giant Olympus Athena as his divine bride for him. In the process he gained superhuman strength.